Update, June 25, 2024: This blog post series is now also available as a book called Fundamentals of DevOps and Software Delivery: A hands-on guide to deploying and managing production software, published by O’Reilly Media!

This is Part 10 of the Fundamentals of DevOps and Software Delivery series. In Part 9, you learned how to store, query, replicate, and back up your data. That blog post focused primarily on data about your customers, such as user profiles, purchases, photos, and so on. This blog post will focus primarily on data that gives you visibility into your business, or what is typically referred to as monitoring.

In the Preface, you heard about LinkedIn’s software delivery struggles. Here’s one more: we had virtually no monitoring. We did collect metrics and logs, but the tools to understand that data were unusable. Translation: we were flying blind. Bugs and outages could go unnoticed for days, sometimes until users complained loudly enough that we took notice. In 2010, an intern created inGraphs, a UI for visualizing our metrics. It had a profound impact on the company, as suddenly, we could spot problems earlier and understand what users were doing. We overhauled monitoring even more as part of Project Inversion, and before long, inGraphs was on TVs all over the office. As David Henke, LinkedIn’s SVP of Engineering and Operations, liked to say: if you can’t measure it, you can’t fix it.

So, what should you measure? The following four items are the most commonly-used monitoring tools and techniques:

-

Logs

-

Metrics

-

Events

-

Alerts

This blog post will dive into each of these topics, and as you go through them, you’ll try out a number of examples, including using structured logging in Node.js, creating a dashboard of EC2 metrics in CloudWatch, and configuring Route 53 health checks with alerts to notify you if your service is down. Let’s get started by looking at logs.

Logs

// How real programmers debug

console.log("*************************** here")Almost every piece of software writes logs, which are records or messages describing what’s happening in that

software: e.g., interesting events, errors, debugging information, and so on. Interactive CLI tools typically write

log messages directly to the terminal (to the stdout and stderr streams); other types of software typically write

log messages to files. These log messages are useful for a variety of purposes:

- Debugging problems

-

When something goes wrong, the logs are usually the first thing you check to understand what’s going on. If you do a good job with logging, you’ll be able to find the events that led up to the error, and sometimes, you’ll have an error message and even a stack trace (the sequence of nested function calls that led to the error) that shows the exact line of code causing the problem.

- Analyzing your software

-

Analyzing log files can provide a number of insights about user behavior, performance bottlenecks, and other aspects of your systems.

- Auditing your software

-

Logs provide an audit trail of the events in your system, which can be useful for compliance and security (e.g., to investigate a security incident).

|

Key takeaway #1

Add logging throughout your code to give you visibility into what’s happening in your systems. |

There are logging libraries in virtually every programming language, such as Log4j

for Java, Winston for JavaScript, zap for Go, and

Loguru for Python. These libraries provide the following benefits over console.log

(or its equivalent in your programming language):

-

Log levels

-

Log formatting

-

Multiple loggers

-

Structured logging

-

Log files and rotation

-

Log aggregation

The following sections will look at each of these items. Logging is easier to show than to explain, so rather than a single example section, I’ve included example code snippets in each of these sections. Let’s start with log levels.

Log Levels

Most logging libraries support multiple log levels, which allow you to specify the severity of each log message. Table 21 shows the typical log levels you’ll see in most libraries (sometimes with slightly different names), ordered from least to most severe:

| Level | Purpose | Example |

|---|---|---|

Trace | Detailed, fine-grained visibility into the step-by-step execution path of the code. | Add trace logging to the entrypoint of every function to show the full code execution path. |

Debug | Diagnostic information that may be useful in debugging and troubleshooting. | Add debug logging to write out the contents of a data structure to help understand a bug. |

Info | Important information you want to capture for every user and request. | Add info logging to record each time a user completes a purchase successfully. |

Warn | Unexpected problems that do not cause the software to fail. | Log a warning when the database is missing some data, but you have a fallback you can use. |

Error | Errors that cause some part of the software to fail. | Log an error when the database is missing some data that causes a feature to not work at all. |

Fatal | Errors that cause the software to fail entirely and need to be addressed immediately. | Log a fatal error when the database is completely down and you can’t serve any requests. |

Example 179 shows how to use the Winston library to do logging in JavaScript:

const logger = winston.createLogger({ (1)

level: 'info', (2)

format: winston.format.simple(), (3)

transports: [new winston.transports.Console()] (4)

});

logger.info('Hello, World!'); (5)Here’s what this code does:

| 1 | Create a new instance of the logging library. |

| 2 | Set the log level to info. |

| 3 | Use Winston’s "simple" formatter. You’ll learn more about log formatting later. |

| 4 | Output the logs to the terminal. |

| 5 | Use the logging library instance to log "Hello, World!" at info level. |

If you run this code, you’ll see:

info: Hello, World!This might not seem like a big improvement over using something like console.log, but using a logging library with

log levels gives you a few advantages right away. First, just about all logging libraries allow you to specify the

minimum log level, and they will automatically discard any messages that have a lower severity. For example, consider

what happens when you have the log statements shown in Example 180:

logger.debug('A message at debug level');

logger.info('A message at info level');

logger.error('A message at error level');When you run this code, you’ll see the following output:

info: A message at info level

error: A message at error levelNotice how the debug message is missing. Since the logger in Example 179 sets the log level to info, only messages at info and above are going to be recorded. Log levels allow you to add lots of trace and debug logging throughout your code without making the logs too noisy during production usage, while still having the ability to use a lower log level in dev, or even in prod (temporarily) while troubleshooting.

Log levels also make it easier to scan the logs, both visually, and with tools such as grep. For example, if you had

a log file with thousands of lines of logs, you could use grep to filter the log to just the error

messages as shown in Example 181:

grep to filter a log file for errors$ grep '^error:' log.txt

error: Example error message.

error: Another example of an error message.Log levels are only one of the benefits you get from using a logging library. Another big benefit is the ability to standardize the format of all your log messages, which can make it even easier to read and scan your logs, as discussed in the next section.

Log Formatting

Just about all logging libraries allow you to define the format to use for your log messages. Example 182 shows how to apply basic formatting to a Winston logger:

const logger = winston.createLogger({

level: 'info',

format: winston.format.combine( (1)

winston.format.timestamp(), (2)

winston.format.printf(({timestamp, level, message}) => (3)

`${timestamp} [${level}]: ${message}`

),

),

transports: [new winston.transports.Console()]

});

logger.info('A message at info level');

logger.warn('A message at warn level');

logger.error('A message at error level');The preceding code applies the following formatting to each log message:

| 1 | Instead of using the simple formatter, create a custom format from (2) and (3). |

| 2 | Include the timestamp in each log message. |

| 3 | Show the timestamp, followed by the log level, followed by the log message. |

If you run this code, you’ll see the following:

2024-10-05T20:17:49.332Z [info]: A message at info level

2024-10-05T20:17:49.332Z [warn]: A message at warn level

2024-10-05T20:17:49.332Z [error]: A message at error levelUsing log formatting ensures every message uses the same standardized pattern, which makes it easier to read and parse the logs. It also allows you to include useful contextual information, often referred to in logging libraries as metadata, to help you understand what was happening when each message was logged. The timestamp is one useful piece of contextual information; Example 183 shows a few other pieces of useful context:

const logger = winston.createLogger({

level: 'info',

defaultMeta: req, (1)

format: winston.format.combine(

winston.format.timestamp(),

winston.format.printf(({timestamp, ip, method, path, level, message}) => (2)

`${timestamp} ${ip} ${method} ${path} [${level}]: ${message}`

),

),

transports: [new winston.transports.Console()]

});

logger.info('A message at info level');

logger.warn('A message at warn level');

logger.error('A message at error level');This code has two changes:

| 1 | Add the request object (e.g., from Express.js) as contextual data. |

| 2 | Update the log format to include the user’s IP, the HTTP method, and the HTTP path from the request object. |

When you run this code, you see the following:

2024-10-05T20:17:49.332Z 1.2.3.4 GET /foo [info]: A message at info level

2024-10-05T20:17:49.332Z 1.2.3.4 GET /foo [warn]: A message at warn level

2024-10-05T20:17:49.332Z 1.2.3.4 GET /foo [error]: A message at error levelNow, each log message tells a little self-contained story: at this time, a certain user sent an HTTP request for a specific path, and here’s a message about it, at a specific severity. These stories become even more useful if you use multiple loggers.

Multiple Loggers

Most logging libraries allow you to create multiple loggers, each with their own name. Example 184 shows how you can create multiple Winston loggers:

const createLogger = (name, level) => { (1)

return winston.createLogger({

level: level,

defaultMeta: req,

format: winston.format.combine(

winston.format.timestamp(),

winston.format.label({label: name}), (2)

winston.format.printf(

({timestamp, label, ip, method, path, level, message}) => (3)

`${timestamp} [${label}] ${ip} ${method} ${path} [${level}]: ${message}`

),

),

transports: [new winston.transports.Console()]

});

};

const loggerA = createLogger('A', 'info'); (4)

const loggerB = createLogger('B', 'warn'); (5)

loggerA.info('A message at info level');

loggerB.info('A message at info level');

loggerA.warn('A message at warn level');

loggerB.warn('A message at warn level');This code does the following:

| 1 | createLogger creates a logger with the given name and level. |

| 2 | In Winston, the convention for naming a logger is to set it as a label. |

| 3 | Update the log format to include the label (the logger name) in brackets. |

| 4 | Create a logger for module A, set to info level. |

| 5 | Create a logger for module B, set to warn level. |

The output from the code is as follows:

2024-10-05T20:19:43.040Z [A] 1.2.3.4 GET /foo [info]: A message at info level

2024-10-05T20:19:43.040Z [A] 1.2.3.4 GET /foo [warn]: A message at warn level

2024-10-05T20:19:43.040Z [B] 1.2.3.4 GET /foo [warn]: A message at warn levelUsing multiple loggers allows you to see what part of your code is doing the logging. It also allows you to configure different logging settings (such as different log levels) for different parts of your code, so if you’re seeing strange behavior in one subsystem, you can turn up logging just for that part of the code, or if another subsystem is spamming the logs too much, you can turn down the log level for just that subsystem. You can also choose to include different contextual data with the logs for each subsystem, making the self-contained stories more relevant to their particular domain.

One trend you may have noticed is that the logs become more useful as you add more and more "metadata" to them: e.g., IP address, module name, timestamp, and so on. This evolves into structured logging, as described in the next section.

Structured Logging

For years, logging text strings and defining fancy formats was the gold standard. Unfortunately, the result was that every piece of software, every library, every language, and every developer formatted their logs a bit differently. This made it hard to build tooling that could automatically parse, search, and filter logs.

In recent years, there has been a push to move to structured logging, where you output logs in a well-defined data format such as JSON or XML, rather than as arbitrary strings. This makes it easier to build a variety of tooling that can parse and interpret the logs. Example 185 shows how to configure the Winston to output logs as JSON:

const createLogger = (name, level) => {

return winston.createLogger({

level: level,

defaultMeta: req,

format: winston.format.combine(

winston.format.timestamp(),

winston.format.label({label: name}),

winston.format.json() (1)

),

transports: [new winston.transports.Console()]

});

};

const loggerA = createLogger('A', 'info');

loggerA.info('A message at info level');There’s only one change from the previous example:

| 1 | Switch from the custom printf-based format to JSON formatting. |

Here’s the output from this code (note, the output has been reformatted to fit this blog post series; it would normally all be on one line):

{

"ip":"1.2.3.4",

"label":"A",

"level":"info",

"message":"A message at info level",

"method":"GET",

"path":"/foo",

"timestamp":"2024-10-05T20:21:49.231Z"

}The advantage of structured logging is that it is both human-readable—arguably, it’s more readable than the raw text format, as every field has a clear label—and machine-readable. It’s now easier to build tooling to parse, search, filter, and analyze logs. Just as importantly, structured logging changes how you think about logging from just plain-text messages to well-defined structures, as shown in Example 186:

loggerA.info({

request_id: req.id,

user_id: user.id,

action: "complete-purchase",

product_id: product.id,

product_price: product.price,

message: `User bought ${product.name}`

});The preceding code produces the following output (again, reformatted to multiple lines to fit in this blog post series):

{

"action":"complete-purchase",

"id":"53ebcb5a-038d-4e6a-a171-7132000c68fd",

"ip":"1.2.3.4",

"label":"A",

"level":"info",

"message":"User bought Fundamentals of DevOps and Software Delivery",

"method":"GET",

"path":"/foo",

"product_id":1098174593,

"product_price":"$54.99",

"request_id":"53ebcb5a-038d-4e6a-a171-7132000c68fd",

"timestamp":"2024-10-05T20:21:49.231Z",

"user_id":53345644345655

}Instead of thinking of arbitrary strings with a little bit of relevant information, you start thinking of logging as a way to capture as much context as you can, in the form of key-value pairs, about what’s happening. As you’ll see later in this blog post, these ideas are closely related to the shift to structured events, observability, and tracing.

So far, all the examples in this blog post have logged directly to the terminal, but most software stores logs in files, as per the next section.

Log Files and Rotation

Logging to the terminal (to stdout and stderr streams) is great in the development environment, when there’s a

person looking directly at the terminal, but it’s not as useful in a server environment. Therefore, outside of an

interactive terminal session, most software writes log data to files. This way, your log history is persisted, so you

can go back any time, and see what happened in the past. Example 187 shows an example of how to send the log

output to a file:

const createLogger = (name, level) => {

return winston.createLogger({

level: level,

defaultMeta: req,

format: winston.format.combine(

winston.format.timestamp(),

winston.format.label({label: name}),

winston.format.json()

),

transports: [

new winston.transports.Console(),

new winston.transports.File({ (1)

filename: `module${name}.log`

})

]

});

};

const loggerA = createLogger('A', 'info');

const loggerB = createLogger('B', 'info');

loggerA.info('A message at info level');

loggerB.info('A message at info level');There is only one change:

| 1 | Log to a file named "module-", followed by the given logger name. |

When you run this code, you end up with two files on disk, moduleA.log and moduleB.log. moduleA.log will have just the logs from module A and moduleB.log will have just the logs from module B. Now you can check the file for the module you’re interested in and use the timestamps to go back to whatever point in time you need.

Note that, over time, log files can become huge. To ensure the files don’t become so large that it’s too hard to browse them, and to prevent running out of disk space, you should typically configure log rotation, where you set up an automated process to rename, compress, and possibly delete log files once they hit a certain file size or age. On most Linux systems, you can use logrotate to do this for you. Alternatively, some logging libraries have log rotation features built-in, as shown in Example 188:

const createLogger = (name, level) => {

return winston.createLogger({

level: level,

defaultMeta: req,

format: winston.format.combine(

winston.format.timestamp(),

winston.format.label({label: name}),

winston.format.json()

),

transports: [

new winston.transports.Console(),

new winston.transports.File({

filename: `module${name}.log`,

maxsize: 10000000, (1)

maxFiles: 10, (2)

zippedArchive: true (3)

})

]

});

};This code uses the Winston library to do the following:

| 1 | Rotate log files that exceed 10 MB. The older log data will be moved to a file with a counter added as a suffix: e.g., moduleA1.log, moduleA2.log, etc. |

| 2 | Once you exceed 10 log files, delete the oldest one. This ensures you’re never storing more than 100 MB of log data. |

| 3 | Compress the older log files, so they take up less disk space. That means the older log files will actually be called moduleA1.log.gz, moduleA2.log.gz, and so on. |

In addition to configuring log rotation to ensure your servers don’t run out of disk space, you should also configure log aggregation, as discussed in the next section.

Log Aggregation

If your software is on a single server, looking at its log files is no problem. But when you run your software across dozens or hundreds of different servers, any one of which may contain the log messages you need, finding the right log file can be a significant challenge. The solution is to use log aggregation tools that send logs from all of your servers to a single, central destination that can store the log data and provide an interface to analyze (search and filter) that data.

Some of the major players in the log storage and analysis space include Loggly, Papertrail, Sumo Logic, Splunk, Graylog, Elasticsearch (with Logstash) / OpenSearch (with OpenSearch Logstash), Datadog, New Relic, Amazon CloudWatch Logs, Google Cloud Logging, and Azure Monitor Logs. Most of these tools provide their own custom data collector tools that you can run on your servers to ship logs to the central data store. There are also several data-store agnostic data collector tools that can ship logs to many different destinations, including Syslog, Fluentd, Fluent Bit, Beats, Vector, and OpenTelemetry Collector (you’ll learn more about OpenTelemetry later in this blog post).

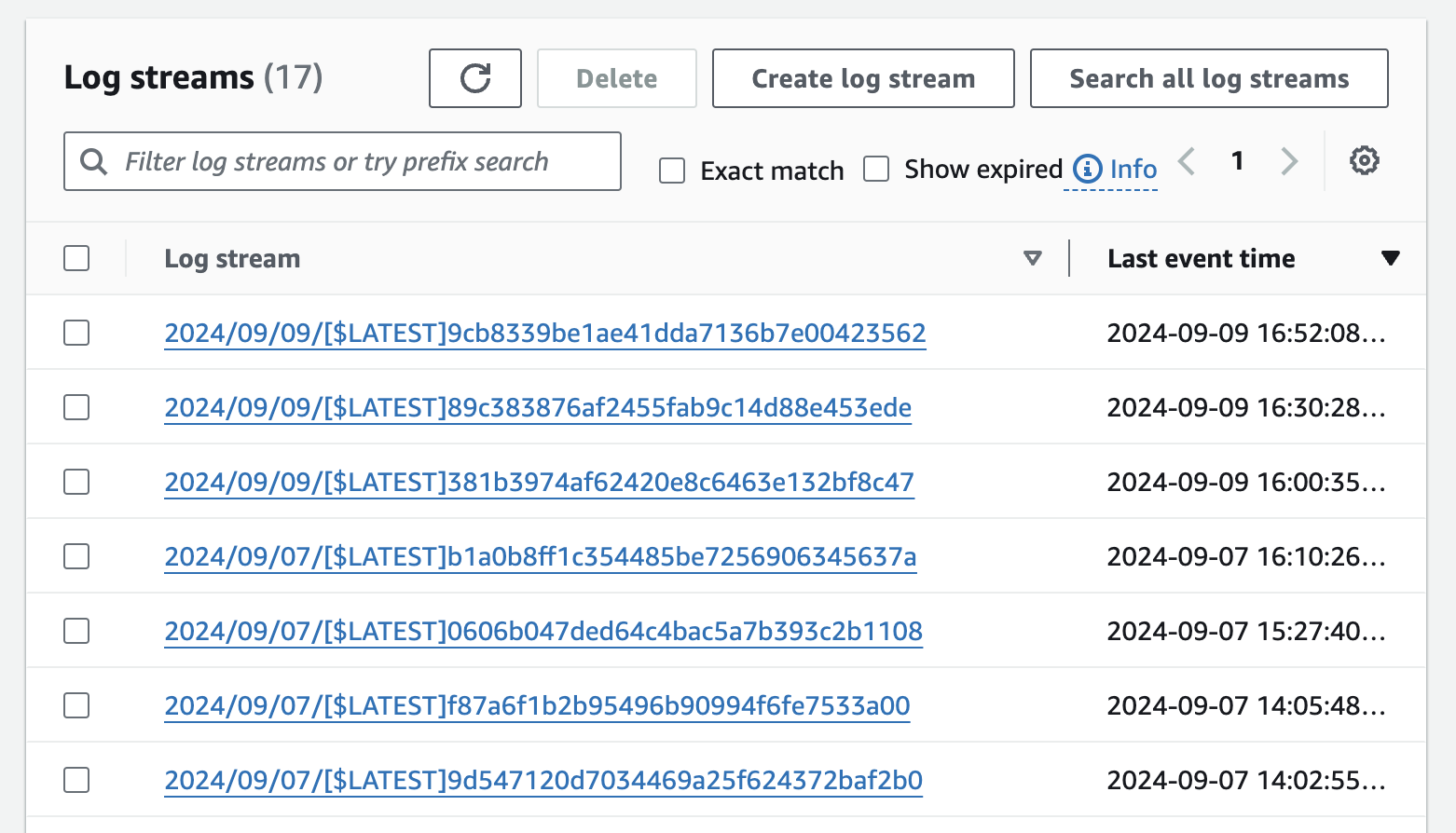

For example, the Lambda functions you deployed in previous blog posts automatically aggregate their

logs in CloudWatch Logs. Logs in CloudWatch are organized into log groups, which for a Lambda function named

function-name will end up being a log group named /aws/lambda/<function-name>. If you head to the

CloudWatch Logs Console, search for the name

of a Lambda function, such as "lambda-rds-app" from Part 9, and click on its log group

(/aws/lambda/lambda-rds-app), you’ll see all the log streams that represent the different instances of that Lambda

function, as shown in Figure 108:

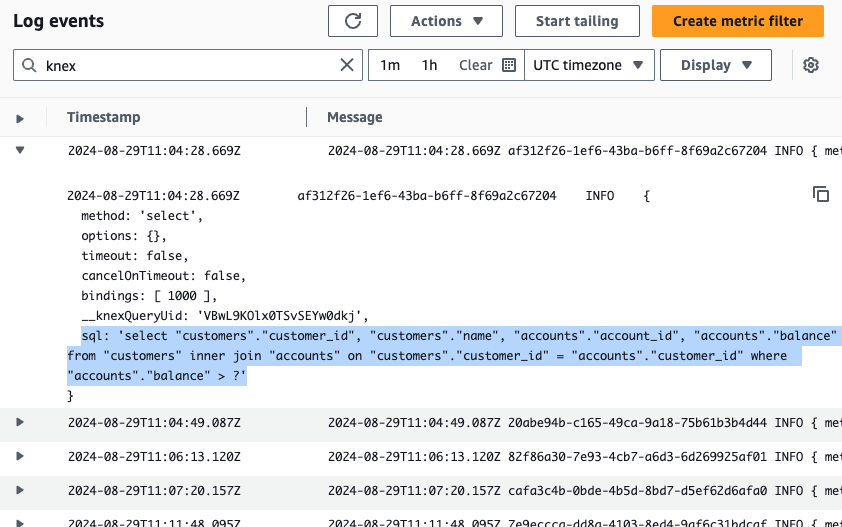

lambda-rds-app Lambda functionEven though this Lambda function has had many instances, and these may have been deployed across many different servers, all the logs are aggregated into CloudWatch Logs, so you can see and search them in a single place. If you click the "Search all log streams" button in the top-right corner, you can search across all your logs, as shown in Figure 109:

Try searching for a term such as "knex," since the lambda-rds-app used Knex.js to access the database. If you see

some results with database queries, congrats, you’re seeing log aggregation in action! Note that Lambda also

automatically includes contextual information in each log message, such as timestamp and request ID.

|

Get your hands dirty

Here are a few exercises you can try at home to go deeper:

|

Log aggregation has several major advantages:

- A single pane of glass

-

You can search all your logs, across all your servers, in a single place.

- Powerful analysis tools

-

If you’re looking at log files directly on a server, you’re typically limited to using tools such as

grepfor search. Most log aggregation tools have more powerful analysis tools: e.g., search (e.g., by keyword), filters (e.g., by timestamp), visualizations, historical comparisons, automatic pattern detection, etc. - Efficient storage

-

Whereas on app servers, you typically need to rotate and eventually delete log files, most log aggregation tools are designed to store massive amounts of log data in an efficient manner.

|

Key takeaway #2

Use log levels, log formatting, multiple loggers, structured logging, log file rotation, and log aggregation to make your logging more effective. |

Logs are often your first step when you’re investigating a problem. But how do you know a problem happened in the first place? You could wait for users to complain, but a better way to go about it is to rely on metrics, as discussed in the next section.

Metrics

Metrics are quantitative measurements of important aspects of your software. Collecting, analyzing, and visualizing metrics gives you valuable insights into your software, including the following:

- Detect problems

-

Metrics are often how you discover problems in your systems. For example, a sudden spike in latency metrics is typically an early warning sign of a problem.

- Understand user behavior

-

Metrics can help you understand how your users are using your products, what’s working, and what’s not. For example, most websites track page view and monthly active user (MAU) metrics to get an understanding of user engagement.

- Improve system performance

-

Metrics can help you identify bottlenecks in your software and to improve latency, throughput, resource usage, and so on. For example, tracking garbage collector (GC) metrics for your Java apps can help you tune your GC settings to minimize stop-the-world pauses.

- Improve team performance

-

Metrics can also give you insight into bottlenecks in how you build your software, allowing you to improve the performance of your team. For example, many companies try to track and improve DORA metrics (which you saw in Part 1) such as deployment frequency, lead time, and recovery time.

Metrics are a key source of continuous feedback that allows for continuous improvement, two central tenets of the DevOps and Agile movements.

|

Key takeaway #3

Use metrics to detect problems, understand user behavior, improve product and team performance, and more generally, as a mechanism for continuous feedback and improvement. |

Here are the topics the following sections will cover:

-

Types of metrics

-

Using metrics

-

Example: metrics in CloudWatch

Let’s start by looking at the different types of metrics.

Types of Metrics

Broadly speaking, you can categorize metrics into the following buckets:

-

Availability metrics

-

Business metrics

-

Application metrics

-

Server metrics

-

Team metrics

The following sections will dig into each of these, starting with availability metrics.

Availability metrics

Availability, or whether users can access and use your product, is the most basic metric that every company should measure. From the user’s perspective, this is largely a "yes" or "no" question: either your service is available or it’s not. At this level, the cause of the outage—e.g., a server crash, a load balancer misconfiguration, an overloaded database—doesn’t matter. All that you’re tracking is whether you’re up or down, and if you’re down, your operations and dev teams will want to know immediately so you can resolve the issue.

To monitor your product’s availability, you need monitoring tools that test the interface used by your customers: e.g., if your product is a website, you need a monitoring tool that regularly sends HTTP requests to various endpoints, and checks it gets an expected response within a certain amount of time. You can set up your own tooling to monitor availability, though the same problems that take down your product (e.g., a data center outage) could also break your availability tooling. A better option may be to use third-party services that can test your service from multiple locations around the world. Some of the major players in this space include Pingdom, Uptime Robot, Better Stack, Uptime.com, Datadog Uptime Monitoring, Amazon Route 53 Health Checks, and Google Cloud Uptime Checks.

These products can gather availability metrics over a long time, giving you a measure of your service’s reliability, which is often reduced to an order of magnitude based on the number of nines. For example, a service with 99.9% reliability is said to have "three nines" and a service with 99.999% reliability is said to have "five nines." Many organizations set goals for how many nines of reliability they will provide, and some companies will include service level agreements (SLAs) in the contracts they sign with customers where they legally commit to one or more reliability goals, known as service level objectives (SLOs). For example, one of the SLOs might be five nines of reliability, with monetary penalties if you fail to achieve that SLO.

Note that 100% availability is not a realistic, practical, or useful target for any real-world SLO. There are three primary reasons for this. The first reason is that each additional nine of reliability is exponentially harder and more expensive to achieve, so you’ll run out of time and money long before you get anywhere near 100%. To put this into perspective, Table 22 shows how much downtime you’re allowed per day, week, month, and year versus how many nines of reliability you’re targeting:

| Downtime per… | 90% | 99% | 99.9% | 99.99% | 99.999% | 99.9999% | 99.99999% |

|---|---|---|---|---|---|---|---|

Day | 2.4 hours | 14 minutes | 1.4 minutes | 8 seconds | 0.8 seconds | 0.08 seconds | 0.008 seconds |

Week | 16 hours | 1.6 hours | 10 minutes | 1 minute | 6 seconds | 0.6 seconds | 0.06 seconds |

Month | 73 hours | 7 hours | 44 minutes | 4 minutes | 26 seconds | 2.6 seconds | 0.26 seconds |

Year | 36 days | 3.6 days | 8.7 hours | 52 minutes | 5 minutes | 31 seconds | 3 seconds |

By the time you’re at seven nines of reliability (99.99999%), you’re only allowed 3 seconds of downtime per year. Any additional nines beyond that means you’re down for just microseconds per year, which is exceptionally difficult to achieve. For reference, the AWS EC2 SLA and Google Cloud Compute SLA targets four nines (99.99%), while the Azure SLA targets three nines (99.9%), and these three cloud providers likely invest more in the reliability of their services than you ever could, so targeting more than four or five nines is not realistic for most use cases.

The second reason 100% availability isn’t a reasonable goal is that, beyond a certain number of nines, your customers can’t tell the difference. For example, if your customer is on a mobile network that provides two nines (99%) of reliability, they won’t be able to tell the difference between a service that provides three nines (99.9%) or four nines (99.99%) of reliability, so the extra cost is unwarranted.

The third reason 100% availability isn’t a reasonable goal is that, to achieve extraordinarily high reliability, you have to stop all new development. This is because code changes and deployments are the most common source of outages (refer back to Table 12), so if you want a service to be perfectly stable, you will essentially have to stop making changes to it. Your customers and developers may want high reliability, but probably not at the cost of you never shipping new features again.

In practice, your reliability goal will need to be a balance between what your customers can tolerate in terms of downtime, what your customers want in terms of new feature development, and what your business can provide at a reasonable cost.

Business metrics

Business metrics are a measure of what your users are doing with your product, such as page views, ad impressions, sales, installs, and so on. There are many tools to track business metrics, including Google Analytics, KissMetrics, MixPanel, PostHog, Fathom Analytics, Overtracking, Matomo, Simple Analytics, Cloudflare Web Analytics, Amplitude, and Heap.

The typical audience for business metrics includes the CEO, the product team, and the marketing team, who use these metrics to inform their business decisions. The most basic version of this is to launch new features and products, and see how they impact your business metrics. The more sophisticated approach is to do A/B testing (AKA bucket testing), where you randomly split users into buckets, where each bucket sees a different version of the product, and you track which version improves your business metrics the most (this is often done using feature toggles, which you first saw in Part 5). For example, you could randomly split your users into two buckets, a bucket A with a new feature enabled, and a bucket B with a new feature disabled, and compare how the users perform at key metrics across the two buckets. Did the new feature increase engagement? Downloads? Purchases? Referrals?

This is just like a scientific experiment, with control and experimental groups. As long as (a) you randomly assign users to buckets, (b) the only difference between the buckets is the new feature, and (c) you gather enough data for it to be statistically significant,[39] then you can be reasonably confident that any difference in metrics between the buckets is due to the new feature. In other words, you are using data to establish a causal relationship! This is sometimes called data-driven product development, and if you have the type of product where you can do it (i.e., you can show users different versions of the product, and you have sufficient traffic to generate statistically significant results), it can be transformational.[40]

Application metrics

Underneath the business metrics are the application metrics, which measure what your software is doing under the hood to power your business, including server-side metrics such as what URLs users are hitting, what response codes they are getting, how long your servers are taking to respond (latency), and how much traffic your servers are handling (throughput), and client-side metrics such as page load times, JavaScript errors, user location, browser type, and screen resolution. To gather server-side metrics, you typically use application performance monitoring (APM) tools such as Datadog, New Relic, Dynatrace, AppDynamics, Amazon CloudWatch, Google Cloud Monitoring, and Azure Monitor. To gather client-side metrics, you typically use real user monitoring (RUM) tools, such as DataDog RUM, New Relic Browser Monitoring, Dynatrace RUM, AppDynamics Browser RUM, Sematext Experience, Akamai mPulse, and Raygun RUM.

If you only have the resources to monitor a handful of metrics, you may wish to focus on four metrics that are sometimes called the four golden signals:

- Latency

-

Latency is a measure of how long it takes your application to service a request. An increase in latency is often an early indicator of problems, such as your servers being overloaded. High latency also has an adverse effect on your users. Your service may be "up" (the availability metrics look OK), but if pages take too long to load, customers may abandon your product.

- Traffic

-

Traffic is a measure of how much demand is on your software, typically measured in requests per second (rps) (AKA queries per second [QPS]). A sudden increase in traffic can overload your systems and lead to problems; a sudden drop in traffic can be an early indicator that something is wrong, such as users not being able to access your service due to a DNS misconfiguration.

- Errors

-

Errors are a measure of what percent of requests fail. Failures may be visible to users (e.g., a 500 response) or hidden from users (e.g., a connection to a database fails at first, but then succeeds on a retry), but either way, you want to track any problems that come up while serving a request.

- Saturation

-

Saturation is a measure of how much demand is on your hardware, typically measured as a percent utilization of some resource: e.g., CPU, memory, or disk utilization percentage. Beyond a certain saturation threshold, system performance usually degrades, leading to increased latency or possibly outages. For example, if your CPU usage is pegged above 90%, this may be a sign that the CPU is thrashing, using up most of its cycles context switching between threads, rather than doing productive work, which can drastically increase latency; if your disk space usage is above 90%, that may be a sign you need to free up space immediately, or you may have an outage when disk space runs out.

Note that the last item, saturation, is not an application metric, but a server metric, as discussed in the next section.

Server metrics

Below your application code, you get down to server metrics, which measure the underlying hardware, including metrics such as CPU usage, memory usage, hard drive usage, and network traffic. This is the space of infrastructure monitoring tools. Many of the APM tools from the previous section (e.g, Datadog, New Relic) can also measure server metrics; if you deploy to the cloud, the monitoring tools from the cloud providers (e.g., CloudWatch, Google Cloud Monitoring) may provide you with many of the server metrics you need. There are also tools that specialize in infrastructure monitoring, especially for on-prem deployments, including Nagios (one of the first open source monitoring tools), Icinga, Zabbix, Domotz, and Sensu.

Team metrics

Team metrics are metrics related to your software development process, such as build times, open pull requests, unresolved bugs, and code coverage. There are many sources of team metrics, including your version control system (Part 4), CI servers (Part 5), automated testing and code coverage tools (Part 4), issue tracking systems (e.g., Jira, Linear, GitHub Issues, Asana), code quality tools (e.g., Codacy, SonarQube, Code Climate, Coverity, Snyk), and developer productivity tools (e.g., Pluralsight, Faros AI, LinearB, Swarmia, Oobeya).

If you only have the resources to track a handful of team metrics, the DORA metrics you saw in Table 2 are a good starting point: deployment frequency, lead times, change failure rate, and recovery time. If you can improve these metrics within your team, you can considerably accelerate your software development process.

Now that you’ve seen all the different types of metrics, let’s talk about how you can actually use them.

Using Metrics

To make use of all the different kinds of metrics you just saw, there are three steps:

-

Collecting metrics (instrumentation)

-

Storing metrics

-

Visualizing and analyzing metrics

Some monitoring tools are designed to do all three of these steps, while others are focused on just one or two. The following sections will take a brief look at each of these steps, starting with how to collect metrics.

Collecting metrics (instrumentation)

The first step with metrics is to instrument your servers and the software on them to capture and expose important metrics. Depending on the type of metrics you are trying to capture, there are several different ways to do this:

- Availability metrics

-

Most availability checking apps (e.g., Pingdom, Uptime Robot, Route 53 Health Checks) have built-in support for collecting availability metrics.

- Business metrics

-

To collect business metrics, you have to instrument your code, especially on the client-side. For example, to use Google Analytics, you add a JavaScript library from Google to every page on your website, and that library will automatically gather data about every page view, click, scroll event, and so on. You can also record custom metrics when important actions happen: for example, when a user completes a purchase, you could add JavaScript code that uses the Google Analytics library to record a custom metric with the ID of the product.

- Application metrics

-

To collect application metrics, you have to instrument your code on both the server-side and client-side. For server-side metrics, you can usually get some degree of automatic instrumentation by installing an agent on each server, or as a sidecar for each container. To get more detailed instrumentation, and to be able to customize the metrics you send, you can also install a language-specific library. For example, if you’re using Datadog as an APM, you can install the Datadog Agent to get automatic instrumentation, and if your application is in Java, you can use the dd-java-agent.jar library to record custom metrics. For client-side metrics, you usually run a RUM library on the client: e.g., you might run the DataDog RUM JavaScript library in the web browser or the DataDog Mobile RUM library in your mobile apps.

- Server metrics

-

To collect server metrics, you typically need to install an agent on each server. One option is an agent designed for specific monitoring software, such as the Nagios Agent or Zabbix Agent. Another option is to use a platform-agnostic agent that can work with multiple monitoring tools, such as collectd, Fluent Bit, StatsD, Telegraf, and Beats. Finally, if you’re using the cloud, the cloud providers typically have their own agents built into the hypervisor, so you can get some degree of server metrics out-of-the-box, without having to install anything.

- Team metrics

-

To collect team metrics, you typically rely on the tools you are using: e.g., Jira’s built-in metrics, Codacy’s built-in metrics, etc.

Having to run so many different tools and agents to collect metrics is inefficient and makes it harder to migrate between vendors. This led to the creation of OpenTelemetry (OTel), an open source, vendor-neutral toolkit for collecting telemetry data: that is, metrics, logs, and traces (you’ll learn more about traces later in this blog post). OTel supports most major programming languages, many orchestration tools (e.g., Kubernetes, Lambda), and many metrics backends (a topic you’ll learn about in the next section), and it is emerging as the de facto standard for instrumenting your code, so I usually recommend using it over proprietary tools, especially for collecting application and server metrics.

After instrumenting your code to expose metrics, you need to store those metrics somewhere, as per the next section.

Storing metrics

All the metrics you gather must be stored in a specialized data store, a metrics backend, that can handle the following:

- Storing a large volume of data

-

Every service, server, and container in your infrastructure may be sending metrics data constantly, possibly even multiple metrics for every single request you process, so the data store needs to be able to handle a large volume of writes.

- Storing time-series data

-

Metrics data is a type of time-series data, where you are recording a number of data points, each with a timestamp, and you typically will need to store it in such a way that you can later slice and dice that data by time.

- Storing data to support analysis

-

The point of storing metrics data is so that you can extract insights from that data, so the data store needs to support the ability to analyze the data in a number of different ways (you’ll see more about this in the next section).

Typically, you use a specialized columnar database (as you saw in Part 9) to store metrics data. Some of these data stores are proprietary to the various monitoring tools you saw earlier: e.g., Google Analytics and Datadog use their own data stores under the hood. There are also some metrics backends that can be used with many other tools (e.g., with various visualization and analysis tools, as per the next section), including Open Time Series Database (OpenTSDB), Prometheus, Timescale, InfluxDB, Graphite, and QuestDB.

Once the metrics are stored somewhere, the final step is to make use of them through visualization and analysis, as discussed in the next section.

Visualizing and analyzing metrics

The three most common things you do with metrics data are the following:

- Visualize and analyze the data

-

The first step is to slice and dice the data to find the information you want. For example, you might want to see the average latency for a specific service over the last hour, or you may want to see how many unique visitors your website got last month. To make sense of the data, you typically need to visualize it in the form of graphs, line charts, histograms, scatter plots, heat maps, etc. Many of the monitoring tools you read about earlier (e.g., Google Analytics, Datadog) have built-in user interfaces to do this sort of analysis and visualization. There are also a handful of tools that can work with multiple metrics data stores, including Grafana, Perses, and Kibana / OpenSearch Dashboards.

- Create dashboards and reports

-

Once you have sliced and diced the data to find visualizations you like, you can collect the most important ones into a dashboard. This gives you a single page where, at a glance, you can get a sense of the health of your systems. Many teams have dashboards up on big screens around the office, and you check them regularly to make sure things are working. You can also create reports from the data, which are documents you might share with your executive team, product team, or investors to help inform business decisions.

- Configure alerts

-

No one can stare at a dashboard all day long, so you usually configure alerts that notify you of problems. You’ll learn more this later in this blog post.

|

Key takeaway #4

Collect multiple types of metrics (availability, business, application, server, team) and build dashboards to focus on the most important metrics to your business. |

Now that you’ve seen how to use metrics, let’s actually try it out by going through an example of metrics in the real world with CloudWatch.

Example: Metrics in CloudWatch

In this section, you’ll see an example of how to use CloudWatch, which is a monitoring tool built into AWS, to get metrics for EC2 instances, ASGs, and ALBs. Out-of-the-box, CloudWatch provides application and server metrics for most AWS resources; you’ll also deploy Route 53 health checks to get availability metrics. Finally, you’ll gather all these metrics in a custom CloudWatch dashboard.

|

Example Code

As a reminder, you can find all the code examples in the blog post series’s sample code repo in GitHub. |

To get started, head into the folder you’ve been using for all the examples in this blog post series, and create a new ch10/tofu/live folder:

$ cd fundamentals-of-devops

$ mkdir -p ch10/tofu/liveNext, copy your asg-sample module from Part 3 into the tofu/live folder:

$ cp -r ch3/tofu/live/asg-sample ch10/tofu/live

$ cd ch10/tofu/live/asg-sampleThe asg-sample module deploys an ASG with an ALB in front of it. It’s configured to deploy an AMI built from the

Packer template in ch3/packer. If you still have that AMI ID, you can reuse it; if not, see the Packer section of

Part 3 for how to build it again. Copy the ID of the AMI into the ami_id parameter in main.tf

of the asg-sample module.

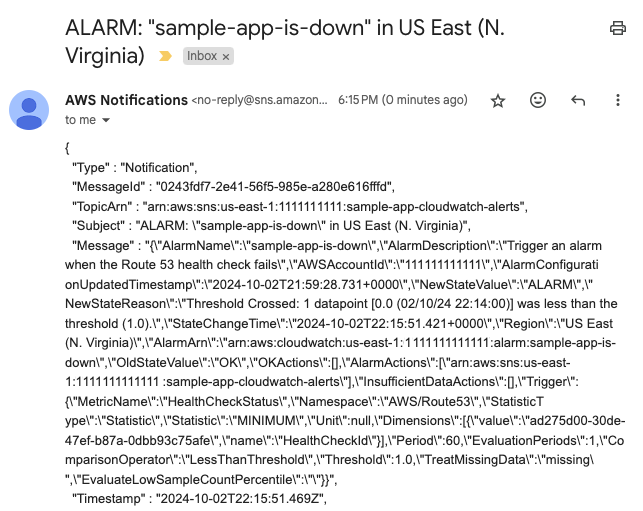

Next, add a Route 53 health check in main.tf, as shown in Example 189:

resource "aws_route53_health_check" "example" {

fqdn = module.alb.alb_dns_name (1)

port = 80 (2)

type = "HTTP" (3)

resource_path = "/" (4)

request_interval = "10" (5)

failure_threshold = 1 (6)

tags = {

Name = "sample-app-health-check" (7)

}

}This code configures a Route 53 health check that will test your service from multiple endpoints around the world, with the following configuration:

| 1 | Send health check requests to the ALB domain name. |

| 2 | Send the requests to port 80. |

| 3 | Send HTTP requests. |

| 4 | Send HTTP requests to the "/" path. |

| 5 | Send requests every 10 seconds. |

| 6 | If the ALB fails even one check, it will be considered as down. |

| 7 | Give the health check the name "sample-app-health-check." |

Next, you can create a CloudWatch dashboard using a module called cloudwatch-dashboard, which is in the

blog post series’s sample code repo in the ch10/tofu/modules/cloudwatch-dashboard folder.

This module creates a dashboard that highlights a few specific metrics for the ASG, ALB, and Route 53 health checks.

Example 190 shows how to update main.tf to use the cloudwatch-dashboard module:

cloudwatch-dashboard module (ch10/tofu/live/asg-sample/main.tf)module "cloudwatch_dashboard" {

source = "github.com/brikis98/devops-book//ch10/tofu/modules/cloudwatch-dashboard"

name = "sample-app-dashboard" (1)

asg_name = module.asg.asg_name (2)

alb_name = module.alb.alb_name (3)

alb_arn_suffix = module.alb.alb_arn_suffix

health_check_id = aws_route53_health_check.example.id (4)

}The preceding code configures the dashboard as follows:

| 1 | Name the dashboard "sample-app-dashboard." |

| 2 | Pass the name of the ASG to the module so it can highlight some metrics for the EC2 instances in that ASG. |

| 3 | Pass the name of the ALB, plus a unique ID from the ALB’s ARN, to the module so it can highlight some metrics for the load balancer. |

| 4 | Pass the ID of the Route 53 health check to the module so it can highlight some metrics for these health checks. |

You should also add the dashboard URL as an output variable in outputs.tf, as shown in Example 191:

output "dashboard_url" {

description = "The URL of the dashboard"

value = module.cloudwatch_dashboard.dashboard_url

}Deploy as usual, authenticating to AWS as described in Authenticating to AWS on the command line, and running init and

apply in the asg-sample folder:

$ tofu init

$ tofu applyIt will take 3-10 minutes for the ASG to deploy and for the apps to boot up, so be patient. When everything is done, you should see two output variables:

alb_dns_name = "<ALB_DOMAIN_NAME>"

dashboard_url = "<DASHBORD_URL>"Test the URL in the alb_dns_name output to check the app is working (make sure to use HTTP, as this app from back

in Part 3 does not support HTTPS):

$ curl http://<ALB_DOMAIN_NAME>

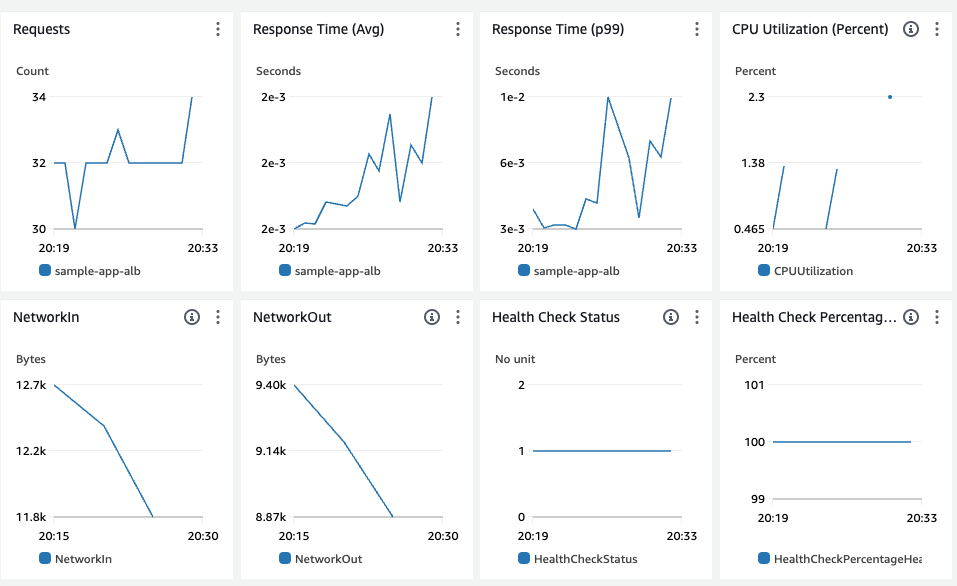

Hello, World!If you see the usual "Hello, World!" text, that means the app is working. Next open up the URL in the dashboard_url

output variable in your web browser. This should take you to a CloudWatch dashboard that looks similar to

Figure 110:

If the dashboard is empty, wait a few minutes for the Route 53 health checks to make requests to your app, and you should start seeing the following metrics:

-

The number of requests to your ALB.

-

The average response time from your ALB.

-

The p99 (99th percentile) response time from your ALB: that is, 99% of requests are handled in this amount of time or less.

-

The average CPU utilization across the ASG.

-

The amount of network traffic that came into the ASG.

-

The amount of network traffic that came out of the ASG.

-

The Route 53 health checks: 1 means it’s passing, 0 means it’s failing.

-

The percentage of Route 53 health checks that are passing.

Congrats, you’re now seeing a small set of useful application, server, and availability metrics! If you want to see all available metrics in CloudWatch, head over to the CloudWatch metrics dashboard; if you want to see more info on the availability metrics, head over to the Route 53 health checks dashboard.

|

Get your hands dirty

Here are a few exercises you can try at home to go deeper:

|

When you’re done testing, you may wish to run tofu destroy to clean up your infrastructure, so you don’t accumulate

any charges. Alternatively, you may want to wait until later in this blog post, when you update this

example code to enable alerts in CloudWatch. Either way, make sure to commit your latest code to Git.

Let’s now move on to the next section, where we’ll discuss structured events, and how they compare to other types of monitoring you’ve seen so far.

Events

In Part 9, you learned about event-driven architectures, where each of your services can publish events into an event stream, and these events can be consumed and processed by one or more other services. One especially useful way to consume events is for the purposes of monitoring, including the following:

-

Observability

-

Tracing

-

Testing in production (TIP)

The following sections will dive into each of these, starting with observability.

Observability

As your architecture becomes more complicated, and it evolves along the lines you saw in Section 1.1.2—e.g., you move from a monolith to microservices, from single-node systems to distributed systems, and so on—two things will start to happen:

-

You’ll hit more and more problems that you never predicted or anticipated.

-

The ratio of unpredictable problems to predictable problems will get worse.

By the time you get to the scale of massive companies like Google and Facebook, you’ll constantly be dealing with problems for which you don’t have, and never would’ve thought to have, automated tests, metrics, or dashboards. While you can anticipate that it’s worth monitoring the four golden signals (latency, traffic, errors, and saturation) in almost any company, what do you do for dealing with complex, unanticipated, and even unimaginable problems? How do you deal with the unknown unknowns? This is where observability comes into the picture.

Observability is a property of a system: in control theory, it’s a measure of how well the internal states of that system can be inferred from knowledge of its external outputs; in DevOps, it’s a measure of how well you can understand any state your software may have gotten itself into solely by interrogating that software with external tools. That means you can figure out what caused a problem, no matter how weird or unpredictable, without shipping new code, but solely through an ad hoc, iterative investigation of monitoring data. If the only data you have access to is the metrics you gathered (because you could anticipate they would be useful), then when facing novel problems, your investigations would quickly hit a brick wall. This is why companies are starting to turn to structured events.

Structured events are essentially hashmaps your service publishes that contain interesting key-value pairs describing what that service is doing. This may sound familiar: the events from the event-driven architectures you read about in Part 9 are structured events; the structured logs from earlier in this blog post are structured events; and you could even publish all the types of metrics you read about earlier in this blog post as structured events. The idea is to instrument your services to publish structured events that contain all the interesting data about that service and to set up tooling to consume these events, and store them in such a way that you can efficiently analyze what’s happening in your system. What sort of tooling is that?

Note that structured events have many dimensions (many key-value pairs): e.g., what time the event happened, what service the event happened in, what host the service was running on, the ID of the user who was accessing that service, what response code the user got from the service, how long it took to process the request, and so on. Moreover, many of these dimensions have a high cardinality, which is a measure of how many distinct values a dimension can have. For example, the user ID dimension could be set to millions of different values, depending on how many users you have in your system. So you need tooling that can efficiently store and analyze data with high dimensionality and cardinality.

I haven’t found a widely-accepted name for this sort of tooling—I’ve heard "observability platform," "application visibility platform," "software debugging tool," and others—so for the purposes of this book, I’ll use the term observability tooling specifically to refer to tools for storing and analyzing structured event data with high dimensionality and cardinality. Honeycomb released one of the first publicly-available observability tools in 2016 and did a lot of the leg work to popularize the concept of observability. Since then, other observability tools have appeared on the market, such as SigNoz, ServiceNow Observability (formerly LightStep), Chronosphere, OpenObserve, and IBM Instana, and many existing monitoring tools have started to add observability features (or at least, to use "observability" in their marketing), such as Dynatrace, New Relic, and Datadog.

The ability of observability tools to efficiently slice and dice data with high dimensionality and cardinality is what allows you to iteratively interrogate your systems without knowing in advance what questions you’ll need to ask. For example, the following shows the steps of a sample debugging session using an observability tool to iteratively figure out the cause of latency spiking on your website:

-

You select the part of the latency chart that shows the spike to see what the slow requests have in common versus the baseline. This reveals that just about all the higher latency corresponds to a single URL.

-

You filter the chart to just the single URL to see what is different when latency is high versus low. You discover all the slow requests correspond to a single user ID.

-

You zoom in on a single page load of when that URL was slow for that user ID. This shows that 5 service calls were made to process this request, and that 1 of those service calls represents 90% of the latency. Note that the ability to "zoom in" on a single page load and correlate all the underlying service calls is possible because all the structured events emitted by those services include additional context (e.g., a request ID).

-

You zoom in on the slow service call to see the database queries that service call made. This reveals one query that will be exceptionally slow for certain users.

While you can set up metrics and alerts ahead of time for something like latency, there’s no way to know ahead of time all the queries you needed to do in the debugging session. And even if you added metrics and dashboards for some of those queries, in a complicated architecture, the next issue you have to debug might require a bunch of novel queries again. This sort of iterative search process is where observability tools shine, as they give you the ability to find answers when you don’t know, and can’t know, what questions you’ll have to ask ahead of time.

|

Key takeaway #5

Instrument your code to publish structured events. Use observability tools to understand what your software is doing by performing iterative, ad hoc queries against these structured events. |

Another class of tools that falls under the observability umbrella are tracing tools, as discussed in the next section.

Tracing

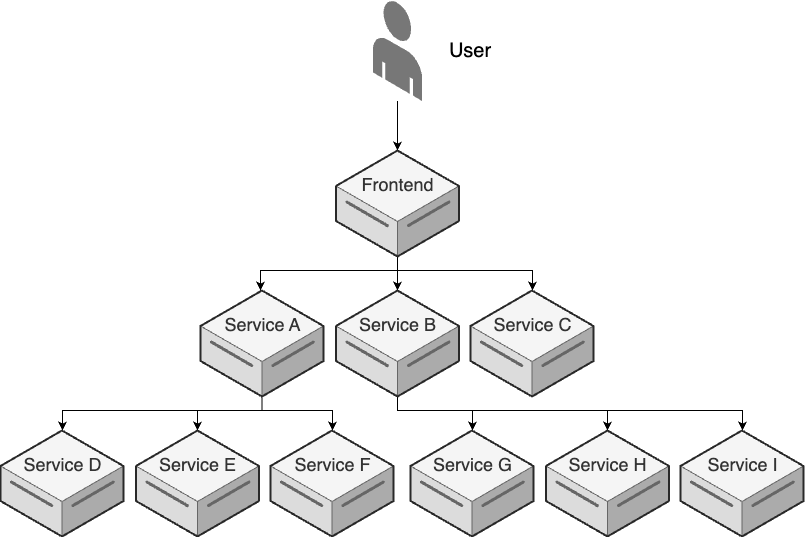

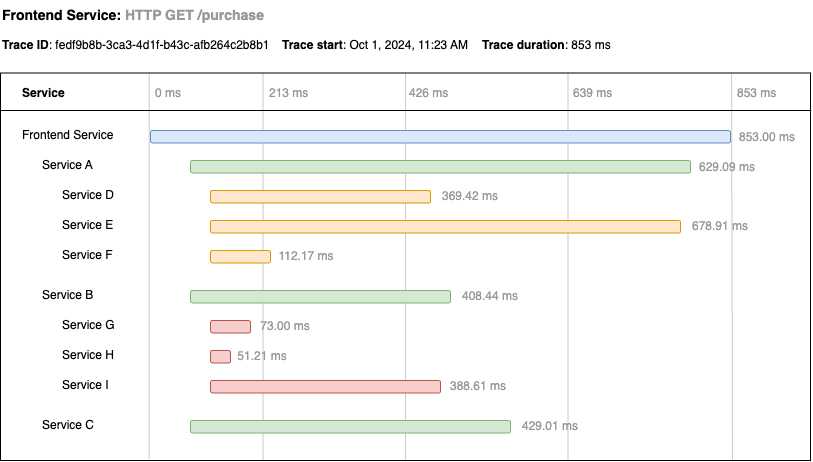

When you have an architecture with many services, a single request from a user may result in dozens of internal requests within your architecture. For example, when your frontend service receives a request, it may send requests to services A, B, and C; service A, in turn, may send requests to services D, E, and F, while service B sends requests to services G, H, and I; and so on, as shown in Figure 111.

The diagram in Figure 111 is static, but in the real world, your services and request flows are going to be changing all the time, and beyond a certain scale, understanding the request flow, and debugging problems within it, can become a serious challenge. To solve this problem, many companies turn to distributed tracing, which is a method of tracking requests as they flow through a distributed system.

The basic idea is that when you get the request that initiates the flow, such as a user’s request to a frontend, you instrument your code to assign a unique ID to that request, called a trace ID, and you propagate that trace ID throughout all the downstream requests. Each of your services can then publish structured events that include the trace ID, along with other useful data such as a timestamp and how long the request took to process, and a distributed tracing tool can stitch all these events back together. That way, for every request, you can get a dynamically-generated diagram similar to what you saw in Figure 111, or a waterfall diagram similar to Figure 112:

Waterfall diagrams consists of a series of nested spans, one for each request, which show you which services were involved, the order of requests (which reveals dependencies between services), how long the requests took, and other useful metadata. These sorts of diagrams help with debugging problems and performance tuning in a microservices architecture.

There are a number of tools available for instrumenting your code and collecting and analyzing distributed traces. Some of the early players in the space were Zipkin, Jaeger, Honeycomb, and LightStep, which was acquired by ServiceNow and is now known as ServiceNow Observability. Later on, many other distributed tracing tools have appeared on the scene, and many existing monitoring tools added varying levels of support for distributed tracing as well, including SigNoz, Grafana Tempo, Uptrace, Dynatrace, New Relic, Datadog, AWS X-Ray, and Google Cloud Trace. These days, using OTel is often the best approach, as it’s open source, works with many different programming languages, orchestration tools, and metrics backends, and it’s a single toolkit you can use to collect all your telemetry data (i.e., metrics, logs, and traces). Also, as you saw in Part 7, some service mesh tools provide distributed tracing out-of-the-box, such as Istio, which uses OTel under the hood.

|

Key takeaway #6

Use distributed tracing to visualize the path of requests through your microservices architecture. |

You’ve now seen a number of tools for understanding what’s happening in production. If you get good enough with these tools, that opens the door to an approach known as testing in production, which is the focus of the next section.

Testing in Production (TIP)

Usually, testing checks a very strong notion of correctness on a few cases, and monitoring checks a very weak notion of correctness under the real production load.

One way to think of monitoring is as the yin to the automated testing yang. That is, you use automated tests to catch some types of errors, and you use monitoring to catch other types. Automated testing is great for catching errors when (a) you can anticipate the error and (b) you can simulate production sufficiently to replicate the error. As you saw in the observability section, as your company scales, you’ll hit more and more errors that you can’t anticipate. Similarly, as your company scales, you’ll find it harder and harder to simulate production. If you ever get to the scale of a Google or Facebook, where you have billions of users and petabytes of data, there’s just no practical way to have a faithful copy of production.

As a result, some larger companies don’t bother. That is, they don’t have staging environments. Instead, they do what has been called testing in production (TIP), where you test your software by deploying it to production, and seeing what happens. This may sound reckless and dangerous, and if not done correctly, it can be. However, the reality is that we all test in production to some extent: every deployment, every configuration change, every single user request is a test. It might work, or might hit a bug. The idea with TIP is that, at a certain scale, the effort to do automated testing in pre-production environments becomes so high, that you may actually be better off investing that effort into making your systems more resilient. Instead of spending thousands of hours trying to catch every bug in testing, you spend that time reducing the impact of bugs.

This doesn’t mean you throw all automated testing out the window, and just thoughtlessly start tossing untested code into production while screaming Leeeeeeerooooooy Jenkins. If anything, TIP requires more discipline to do well, including the following:

- Automated testing

-

You still need plenty of automated tests to try to catch issues before they hit production, just as you saw in Part 4, including static analysis, unit tests, integration tests, etc. However, at scale, you replace most end-to-end tests with TIP.

- Automated deployments

-

In order to test in production, your deployment process must be completely automated, as you saw in Part 5, and fast, so you can roll back or forward quickly in case of problems. Ideally, you do canary deployments as well, to limit the blast radius in case things go wrong.

- Metrics

-

As you saw earlier in this blog post, you should be keeping track of key metrics (e.g., the four golden signals) and ensuring you have a good baseline for what "normal" looks like. That way, if the code you’re testing in production causes problems, you can spot it quickly, as the metrics will no longer look normal.

- Observability and tracing

-

Testing in production depends heavily on your system being highly observable, and having distributed tracing in place, so if something goes wrong, you can quickly interrogate your system to figure out the cause, and apply a fix.

- Feature toggles, ramping, and A/B tests

-

One of the key ideas with testing in production is that you don’t test with all users. Instead, as you saw in Part 5, you limit the blast radius by wrapping each new piece of functionality in a feature toggle, so it’s off by default, and you turn it on only for a small percentage of users, perhaps just company employees initially, and if the feature works well, then you gradually ramp it up, starting with a tiny percentage of users, and increasing the percentage over time so long as you don’t hit problems. Moreover, you may wish to A/B test your new code versus the original code, which requires not only that you’re gathering metrics, but that you can also slice and dice your metrics based on the feature bucket (A or B) the user was in. That way, you’re not only testing that your code works, but also that it produces better outcomes for your customers and your business, which is arguably the most important test you can do in production!

If you do TIP correctly, you’ll see significant improvements in all your DORA metrics. Deployment frequency will go up and lead times will go down, as deploying will be something every team does dozens or hundreds of times as part of their normal coding process, rather than only at the end. Also, change failure rates and recovery time will go down, as your tooling will be designed to limit the blast radius and quickly recover from errors.

That said, testing in production is not without risks and challenges. For each feature you work on, you will constantly need to balance how much time you invest in testing before production versus how much testing you want to do in production. When the cost of bugs is high, you’ll want to lean more heavily towards automated testing before production, such as for the following types of features:

- Security features

-

If you get authentication, authorization, or encryption wrong, you can put your customer data and your entire business at risk.

- Financial features

-

Getting financial transactions wrong, such as accidentally charging a customer multiple times or zero times for what should be a single transaction, can be very costly (literally) for your business.

- Data storage features

-

A risk with data storage is that you accidentally corrupt or delete your data. With a good backup strategy, as you saw in Part 9, it’s possible to recover from such accidents, but not without an outage and loss of customer trust.

- Features where human lives are at risk

-

If you are building medical software, or autopilot, or anything else where human lives are at risk, every single bug can lead to tragedy and tremendous loss.

For these sorts of features, do as much testing before production as you possibly can. On the other hand, when the cost of bugs is low, you’ll want to lean more heavily towards testing in production. For example, if an employee at Facebook is testing in production, and that leads to a bug that breaks the news feed for a small number of users, it’s just not that big of a deal. For this very reason, Facebook’s motto used to be "move fast and break things." They eventually changed this motto to "move fast with stable infra," which in some ways, does an even better job of encapsulating the TIP philosophy of speed and resiliency.

That said, things will go wrong even with the most resilient systems, and when they do, you’ll want to be alerted about it. This is the focus of the next section.

Alerts

Logs, metrics, and events are useful for understanding the cause of an incident, but how do you find out that an incident happened in the first place? You probably don’t want to rely on a developer just happening to look at the right metric, and you definitely don’t want to wait for your users to complain. Instead, just about all the logging, metrics, and events tools you saw earlier in this blog post allow you to configure alerts that notify you of problems. In the next several sections, we’ll go through the following topics related to alerts:

-

Triggers

-

Notifications

-

On-call

-

Incident response

Let’s start by looking at what sorts of events can trigger an alert.

Triggers

The idea with alerts is to define triggers or rules for when a notification should be sent to someone. You typically define triggers in terms of various metrics, events, or logs exceeding one of the following types of thresholds:

- Absolute threshold

-

Absolute thresholds are based on specific, concrete values. Example: trigger an alert when the average latency exceeds 500 ms. These are usually the first type of trigger you turn to, as they are easy to understand and supported in virtually all monitoring tools. They are especially useful for metrics where there are unambiguous "bad" values, such as free disk space dropping to zero. However, not all metrics are this clear. For example, what’s a "bad" value for latency may depend on the product, the time of day, the time of year, and many other factors that may change as your business grows. If you have a good understanding of what "normal" is for your metrics, you can use absolute thresholds, but it’s easy to get these wrong and end up with a lot of false positives, which are alerts that go off even though there isn’t anything wrong. This can quickly lead to alert fatigue (a topic you’ll learn more about later in this blog post), so you’ll also need to use the other two types of thresholds discussed next.

- Relative threshold

-

Relative thresholds are based on comparison to a baseline value. Example: trigger an alert when latency increases by more than 50%. Relative thresholds don’t require you to specify a single, absolute value for "normal." Instead, they help you catch spikes, which in certain cases, can be a clear sign of problems.

- Historical threshold

-

Historical thresholds are based on comparisons to the same metric at a comparable previous point in time. Example: trigger an alert if the latency today is 50% higher than the latency at the same time last week. Historical thresholds are especially valuable for business that have repeated traffic patterns, such as seasonal fluctuations in an ecommerce businesses (e.g., traffic spikes around the holidays).

Once you’ve defined a bunch of triggers, the next question is what to do when a trigger goes off. Whenever possible, the default action should be to deal with it using automation, such as auto healing (e.g., replace a server or container) or auto scaling (e.g., scale the number of servers or containers up or down). If you can’t handle a trigger automatically, the next step is to notify a human, as discussed in the next section.

Notifications

Although this entire section is about alerts, the reality is that you should use alerts sparingly. I’m specifically referring to the kinds of alerts that notify a person, interrupting whatever they are doing, such as an alert on a pager or, as is more common these days, a smartphone. Alerts of this kind have a significant cost. First, they interrupt work. I’ve seen some companies where alerts go off all the time, so their operations team never has time for anything other than "fire fighting." Second, they interrupt personal life. Since alerts may come up at any time—in the middle of dinner, in the middle of the night, or during your Christmas holiday—they can significantly interfere with your personal life.

If alerts go off too often, your team will experience alert fatigue, where they will become stressed, unproductive, and unhappy. In the best case, they’ll start to ignore alerts, like in The Boy Who Cried Wolf parable. In the worst case, your team members will burn out and leave. To avoid alert fatigue, you should only use an alert if the trigger meets all the following criteria:

- It’s actionable

-

There’s no point in alerting a person if they can’t do something about the alert.

- It’s important

-

Even if it’s actionable, there’s no point in alerting a person if it isn’t important. For example, CPU usage being higher than normal probably doesn’t merit an alert, whereas an outage of your entire product probably does.

- It’s urgent

-

Even if it’s actionable and important, you shouldn’t alert someone unless it’s urgent and must be dealt with immediately. Think of alerts as a signal to your team to drop everything, and investigate immediately. For most problems, it’s OK to wait a few hours, so alerts should only be used when waiting will cause significant harm to your customers or your business.

If a trigger doesn’t meet all of these criteria, then instead of an alert, you should send a non-urgent notification, such as filing a ticket in an issue tracking system or sending a message to a chat room (e.g., in Slack or Teams) or mailing list that your operations team checks from time-to-time. That way, you know someone will see and investigate the issue, without forcing them to drop everything to do it.

|

Key takeaway #7

Use alerts to notify you of problems, but only if those problems are actionable, important, and urgent. |

If a trigger does meet all of these criteria, then you send an alert, typically to the team members who are on-call, as per the next section.

On-Call

When you’re a tiny startup, if there’s an incident, you might alert everyone. As you grow, this becomes inefficient and counter-productive, so it’s more common to have an on-call rotation, where you create a schedule that assigns specific team members to be responsible for responding to alerts at specific times. In the past, you might have managed the on-call rotation with a spreadsheet and had the on-call team members carry pagers, but these days, it’s more common to use dedicated software to manage the rotation and send alerts to smartphones. The major players in this space include PagerDuty, Splunk On-Call (formerly known as VictorOps), Opsgenie, Blameless, Squadcast, All Quiet, and Rootly.

Being on-call is stressful, so here are a few practices to consider:

- Measure toil

-

Toil is work that is manual, repetitive, reactive, and temporary in nature, as opposed to engineering, where you proactively automate something to create a reusable, long-term solution. For example, if every few days, you have to fix outages due to botched manual deployments, that’s toil. If you then take the time to automate your deployment process, that’s engineering. A good practice is to try to estimate what percentage of time each team spends on toil versus engineering—this can even be a metric you track on a dashboard—and to proactively shift the balance towards engineering. No team should be spending more than ~50% of its time on toil; ideally, you can get toil for all teams below 20%.

- Set an error budget

-

There is often a tension between development and operations teams, where developers want to deploy more often to ship new features, but operations wants to deploy less often, as deployments are the most common cause of outages (see Table 12) that wake operations up at 3 AM. One way to resolve this tension is to establish an error budget, which is one minus the availability you’re targeting: e.g., if you’re targeting 99.9% availability, your error budget is 0.1%, or about 10 minutes per week. The idea is that, as long as you’re staying within your error budget, you deploy as often as you can, and any outages you hit are not seen as failures to avoid, but as an expected part of the development process. If you exceed your budget, then you slow down new feature development, and have everyone invest in making your software more resilient (through the techniques you’ve learned in this blog post series!), until you can consistently stay within your error budget.

- Include developers in the on-call rotation

-

Another way to resolve the tension between development and operations teams is to include developers in the on-call rotation. This is a very quick way to squash the "throw it over the wall" mentality. After devs have been woken up a few times at 3 AM, they’ll start to write their code a bit differently, investing more in automated testing, feature toggles, monitoring, and so on.

- Recognize those who resolved incidents

-

Even if you do all the preceding items, you’ll still have outages, alerts, and stress. One way to ease the pain a little bit is to recognize employees who successfully resolved incidents, especially in response to an alert outside normal work hours. For example, you could call out their efforts in front of their peers, automatically give them a day off after a late night of fire fighting, or even offer a small monetary bonus. It should be just enough to clearly acknowledge that being on call is tough, and that you appreciate the sacrifice, but not so much that you’re incentivizing team members to create incidents (e.g., to earn major bonuses). In other words, team members should still prefer that no incident happened at all, but the recognition should take some of the sting out of it.

|

Key takeaway #8

Use an on-call rotation to deal with alerts, but make sure to keep toil in check, enforce an error budget, include developers in the rotation, and recognize those who resolved incidents. |

If you’re on-call, and an alert goes off, you’ll need to resolve the incident by going through an incident response process, as defined in the next section.

Incident Response